Processing Oblique UAS Imagery Using Image Annotation

Introduction

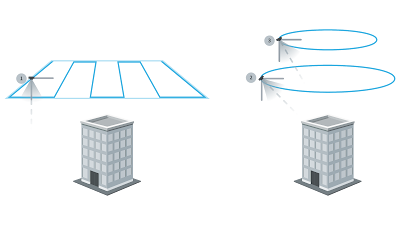

Often, when collecting UAS data, the imagery is taken at nadir, meaning the camera sensor is pointed vertically downward. This collection method is used when flying over mostly horizontal surfaces, where a top-down view of the area is desired. When collecting data of something like the facade of a building, or any mostly vertical object, data collected at nadir will not have enough information to correctly model the vertical surfaces (see figure 1).

To capture enough information to correctly model mostly vertical surfaces, the sensor must be tilted to an oblique angle, usually between 30 and 60 degrees. Multiple passes in different directions must be flown to ensure full coverage; flying at different altitudes and angles is recommended to achieve the best results. Figure 2 below shows the difference between taking nadir imagery and taking oblique imagery.

Figure 2: Taking Nadir Imagery Versus Taking Oblique Imagery (Image sourced from https://support.skycatch.com)
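A simple foreshortening calculation helps show why a tilt of roughly 30 to 60 degrees is a reasonable compromise. The sketch below is only an illustration and does not come from Pix4D or Skycatch. It assumes an idealized camera whose optical axis is tilted `tilt_deg` away from nadir and faces the wall head-on, and the function name `surface_visibility` is hypothetical. A surface's apparent size scales with the cosine of the angle between the viewing direction and the surface normal, which works out to cos(tilt) for flat ground and sin(tilt) for a vertical facade.

```python
import math


def surface_visibility(tilt_deg):
    """Return (ground, facade) foreshortening factors for a camera tilt.

    tilt_deg is the angle between the optical axis and nadir (0 = straight down).
    Assumption: the camera faces the vertical surface head-on.
    A factor of 1.0 means the surface is seen face-on; 0.0 means edge-on.
    """
    tilt = math.radians(tilt_deg)
    ground = math.cos(tilt)  # horizontal surface: normal points straight up
    facade = math.sin(tilt)  # vertical surface: normal points horizontally
    return ground, facade


for tilt_deg in (0, 30, 45, 60):
    ground, facade = surface_visibility(tilt_deg)
    print(f"tilt {tilt_deg:2d} deg: ground {ground:.2f}, facade {facade:.2f}")
```

Under these assumptions the facade factor is 0 at nadir, which matches the observation that nadir imagery carries no usable information about vertical surfaces, while at 45 degrees the ground and the facade are seen equally well.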

When oblique imagery is processed with nadir imagery, the best of both techniques is combined and the results are better than either technique alone. Figure 3 shows multiple passes of oblique and nadir images taken to create a 3D model of Michigan Central Station. Check out the Pix4D website linked here to view the interactive 3D model.

Figure 3: Michigan Central Station Nadir and Oblique Imagery (Image sourced from www.pix4d.com)

What are the strong points and weak points of mapping in nadir vs. in an oblique fashion?

- Nadir

  - The advantage of nadir imagery is that the mission can be laid out in any orientation; as long as there is enough overlap, the same data will be collected in one direction as in another.

  - The disadvantage of nadir imagery is that, as stated above, it does not capture vertical surfaces.

- Oblique

  - The advantage of oblique imagery is that it can capture vertical surfaces as well as some horizontal ones.

  - The disadvantage of oblique imagery is that one has to fly multiple passes in different directions, at different angles and altitudes, to get good data. Another disadvantage is that oblique imagery captures unwanted background details, which produce unwanted artifacts and noise in the model during processing.

To combat the issue of noise and unwanted artifacts, Pix4D came up with a solution called image annotation.

What is Image Annotation?

Image annotation, as used in Pix4Dmapper, is the process of cleaning up noisy datasets by removing objects and clarifying what is background and what is foreground, so that clean models can be generated.

At what phase in the processing is this performed?

Step 1, Initial Processing, must be complete before one can annotate the images. However, one may also annotate the images after step 2, Point Cloud and Mesh, and then reprocess step 2.

What types of Image Annotation are there?

There are three types of image annotation: Mask, Carve, and Global Mask.

- The Mask annotation tool marks pixels in the image that should not be used for processing. It allows the removal of obstacles that appear in only a few images, such as a car captured in a few frames as it moves down a road. Mask also allows the user to remove the background of an orthoplane, as shown in a Pix4D help video linked here. Figure 4 below is an image of the orthoplane masking process taken from that video.

- The Carve tool removes the 3D points associated with the annotated parts of the image in the rayCloud. It also deletes the rays that connect the camera centers and the annotated pixels (see figure 5). This means that neither the 3D points, nor the annotated pixels are used for processing.

Figure 5: How the Carve Tool Functions

- The Global Mask annotation tool allows the user to annotate a specific region in the image to be excluded from processing in every single image throughout the dataset. Described another way, the tool removes the same region of pixels in every image and ensures that they are not processed. This tool can be helpful when one needs to remove the landing gear from each image in the dataset.
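The difference between Mask and Global Mask can be sketched in a few lines. This is a conceptual illustration only, not Pix4D's implementation or API: the function `usable_pixels`, the tiny 4 x 3 image size, and the image names are all hypothetical. A global mask is one set of pixel coordinates shared by every image, while a per-image mask applies to a single image.

```python
def usable_pixels(width, height, global_mask, per_image_mask=frozenset()):
    """Return the set of (col, row) pixels still available for processing.

    global_mask: pixels excluded in every image (e.g. landing gear).
    per_image_mask: pixels excluded in this image only (e.g. a passing car).
    """
    all_pixels = {(col, row) for row in range(height) for col in range(width)}
    return all_pixels - set(global_mask) - set(per_image_mask)


# Hypothetical example: the landing gear always covers the bottom row.
WIDTH, HEIGHT = 4, 3
landing_gear = {(col, HEIGHT - 1) for col in range(WIDTH)}

# A car shows up in only one of the images.
per_image_masks = {"IMG_002": {(1, 0)}}

for name in ("IMG_001", "IMG_002"):
    kept = usable_pixels(WIDTH, HEIGHT, landing_gear,
                         per_image_masks.get(name, frozenset()))
    print(name, "keeps", len(kept), "of", WIDTH * HEIGHT, "pixels")
```

The point of the sketch is that the global mask is defined once and reused, so the user does not have to paint the same region in every image.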

Methods:

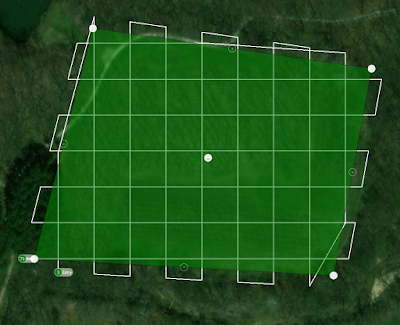

Before one can process the oblique imagery, one must first collect it. As discussed above, when collecting oblique imagery, one must be sure to capture the object from many different angles, sides, and altitudes to ensure full coverage. One suggestion is to fly once at nadir, then fly a circle around the object with the sensor at some low angle from nadir, then fly another orbit at a lower altitude with the sensor angled up higher. Repeat this circular pattern at lower altitudes and higher camera angles as necessary to gain enough information to create a model. Figure 6 below shows a flight in which this approach was used to gather the oblique data.

Once the oblique imagery is gathered, it will need to be initially processed, annotated, and then reprocessed to remove noise and artifacts. The process for performing image annotation is documented on the Pix4D Support page, which can be found by typing “image annotation” into the search bar or by clicking on the direct link here. Alternatively, one can follow the steps listed below.
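The orbit pattern described above can be sketched as a small waypoint calculation. This is a minimal illustration under stated assumptions, not the flight-planning method used for this dataset: it works in a flat local coordinate system in meters, the function name `orbit_waypoints` and its parameters are hypothetical, and headings use the math convention (degrees counterclockwise from the +x axis) rather than compass bearings. For a fixed radius, lowering the altitude increases the tilt from nadir needed to keep the camera aimed at the object.

```python
import math


def orbit_waypoints(center, radius_m, altitude_m, target_height_m=0.0, n_points=12):
    """Return evenly spaced waypoints on a circle around `center`.

    Each waypoint aims the camera at a point `target_height_m` above the
    center. Tilt is measured from nadir: 0 = straight down, 90 = horizontal.
    """
    cx, cy = center
    # Tilt needed to look from the orbit altitude down to the target point.
    tilt = math.degrees(math.atan2(radius_m, altitude_m - target_height_m))
    waypoints = []
    for i in range(n_points):
        bearing = 2.0 * math.pi * i / n_points  # position angle on the circle
        waypoints.append({
            "x": cx + radius_m * math.cos(bearing),
            "y": cy + radius_m * math.sin(bearing),
            "z": altitude_m,
            # Face back toward the center of the orbit.
            "heading_deg": (math.degrees(bearing) + 180.0) % 360.0,
            "tilt_from_nadir_deg": tilt,
        })
    return waypoints


# Three orbits at decreasing altitude around an object at the origin.
for altitude in (40.0, 25.0, 10.0):
    first = orbit_waypoints((0.0, 0.0), radius_m=20.0, altitude_m=altitude)[0]
    print(f"altitude {altitude:4.0f} m -> tilt {first['tilt_from_nadir_deg']:.1f} deg from nadir")
```

A real mission would still need the nadir pass, enough image overlap between waypoints, and whatever coordinate conversion the flight-planning app requires.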

Step 1:

Follow steps 1 through 8 of my February 11th post, changing step 7 to select 3D Models, then start the Initial Processing.

Step 2:

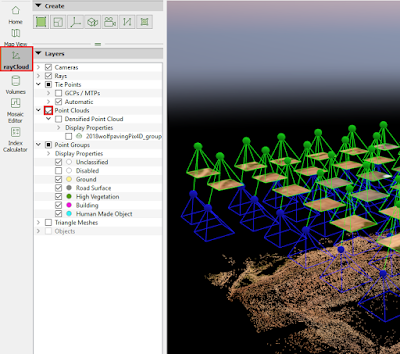

Once Initial Processing is complete, click the rayCloud icon located on the left sidebar, then open the drop-down for Calibrated Cameras, located in the Layers area underneath Cameras.

Step 3:

Select an image and click on the pencil icon on the right side of the screen. Once it is done loading, select the appropriate annotation tool, as discussed in the introduction.


*Note: A good rule of thumb is, if one would like to keep the background in the model, use the Mask tool. If one just wants the object of interest and nothing else, use the Carve tool. If one needs to remove something that is in the same place in every image frame, use the Global Mask tool.

*Tip: If areas of the object do not have many photos covering them from every angle and altitude, it may be better to use the Mask tool instead of the Carve tool, as the results will come out cleaner.

Figure 7 below shows steps 2 and 3 of the image annotation process.
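The rule of thumb and tip above can be written down as a small decision helper. This is only a sketch of the guidance in this post, not a Pix4D feature; the function name `choose_annotation_tool` and its parameters are hypothetical.

```python
def choose_annotation_tool(same_place_in_every_image, keep_background,
                           sparse_coverage=False):
    """Suggest an annotation tool based on the rule of thumb in this post.

    same_place_in_every_image: the unwanted item sits in the same spot in
        every frame (e.g. landing gear).
    keep_background: the background should remain in the final model.
    sparse_coverage: parts of the object lack photos from many angles.
    """
    if same_place_in_every_image:
        return "Global Mask"
    if keep_background or sparse_coverage:
        return "Mask"
    return "Carve"


print(choose_annotation_tool(False, keep_background=False))  # object only
print(choose_annotation_tool(False, keep_background=False, sparse_coverage=True))
print(choose_annotation_tool(True, keep_background=True))    # fixed obstruction
```

The suggestion is only a starting point; as step 6 notes, the tool may need to be changed after inspecting the results.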

Step 4:

Left click, or click and hold down the left mouse button, and paint over the area one wishes to annotate out. If one accidentally annotates something that he or she wishes to keep, hover over the area until it becomes darker and then click; that area will be un-annotated again. Figure 8 shows the process of using the Carve tool to annotate an image of Professor Hupy’s truck.

Step 5:

Repeat Step 4 on several (4-6) images taken from different angles and altitudes, then uncheck Initial Processing, check the Point Cloud and Mesh box, and click Start.

Step 6:

Once Point Cloud and Mesh is finished processing, inspect the results and adjust the annotation method as needed to clean up the 3D model.

Discussion / Results:

Using the information above, two datasets were annotated: Professor Hupy’s truck and a light pole (see figure 9).

Different annotation tools were tried on each dataset, and it was found that the Carve tool worked best for Professor Hupy’s truck while the Mask tool worked better for the light pole.

Professor Hupy’s Truck:

The Carve tool may have worked better than the other tools on the truck dataset because it removed the extra background from the rayCloud, making the dataset easier to process. Video 1 below orbits the truck, showing it from multiple angles.

Video 1: Professor Hupy's Truck Pix4D Results

Overall, the results for Professor Hupy’s truck look relatively good; however, there are two main problem areas. First, not enough images were taken at a low enough altitude to capture what is below the truck, and as a result there are extra blobs connected to the tailgate and underside of the truck that shouldn’t be there (see figure 10). Second, areas that are reflective or transparent, such as the truck paint and window glass, become very distorted, as shown in figure 11.

To improve the data and combat the extra blobs underneath the truck, more orbits around the truck are necessary at a very low altitude with the camera almost horizontal. As for the reflective and transparent surfaces, there is not much that can be done unless the images are collected in a grey room with diffused light, so that there are fewer erroneous reflections for Pix4D to use.

What objects seem to be the most difficult to truly capture in 3D, and why?

Looking at the 3D model of the truck, it seems that anything reflective or transparent is very difficult to capture. Such surfaces are either see-through or show reflections that change from image to image, making it almost impossible to correctly generate 3D points.

The Light Pole:

The light pole, as mentioned above, was processed using the Mask annotation tool. The Mask tool was chosen over the Carve tool in this case because not enough 3D points were created on the light pole alone for the Carve tool to work well. The Mask tool made it easier to distinguish the foreground from the background and clean up the 3D model while still allowing the background to remain. Below is video 2, showing a virtual flight around the light pole.

Video 2: Lightpole Pix4D Results

Looking at the video above, the results look mostly good, with some errors and missing sections. The area of the pole below the lights was missing, probably because there were not enough images of that particular area; the lights and crossbar obscured the view of the pole in certain images.

In addition to the missing sections, 3D points of the ground surrounding the light pole were mistakenly grafted to the pole, lights, and light support crossmember; when the triangle mesh was visualized, these points showed up as unwanted blobs on the structure (see video 3).

Video 3: Lightpole Blobs

To fill in the missing sections mentioned above, an additional flight around the light pole at a lower altitude with the camera horizontal could give Pix4D enough data to create 3D points in that area.

Conclusions:

With the addition of oblique imagery to a dataset, and with image annotation applied to it, less noisy 3D models of an object can be created. These models can be used in a professional setting to convey the object from a more natural, human point of view, allowing the client to better grasp and interact with the information displayed.
